Large deviations

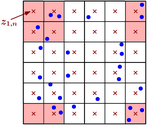

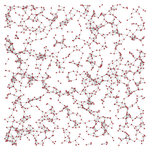

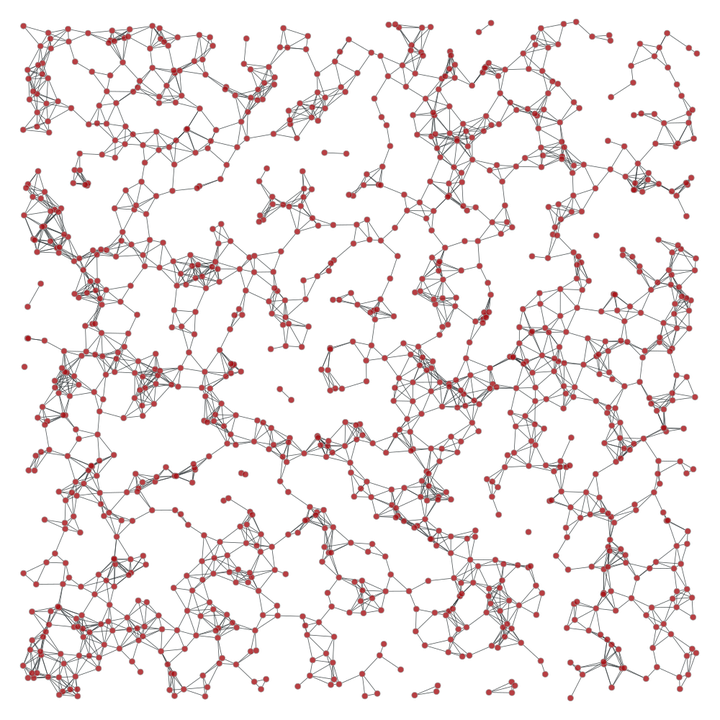

What is the probability that a random geometric graph in a sampling window has atypically few or atypically many edges or triangles? What are the sources leading to such rare events? These examples illustrate the core questions of large devations in geometric probability.

For combinatorially defined random graphs, developing appropriate tools to understand the precise nature of large deviations has been the starting point for a vibrant research direction. In the geometric setting, the process-level large deviation theory for the Poisson point process could explain large deviations in a variety of examples satisfying stabilization and exponential moment conditions. However, for instance for the edge count in the random geometric graph such moment conditions do not hold and the process-level theory breaks down. In analogy to sums of heavy-tailed random variables, the large-deviation behavior is coined by a fascinating condensation phenomenon.

Another example for condensation in the setting of stochastic geometry are upper large deviations of power-weighted distances in the

In a

paper with

B. Jahnel and

A. Tóbiás, we elucidate how sprinkling-based regularization makes it possible to determine lower large deviations for clique counts in the random geometric graph, intrinsic volumes of Poisson-Voronoi cells, as well as power-weighted edge lengths in the random geometric,

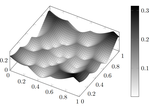

While the previous paragraphs illustrate the recent progress in the understanding of rare events in random networks, for a variety of applications it is not acceptable to encode only binary interactions in the form of edges. Here, simplicial complexes offer the alternative of modeling far more complex higher-order interactions. In a

paper with

T. Owada, we prove a large deviation principle for the point process associated to

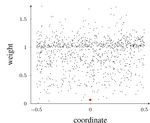

While the large deviation principle pertains an asymptotic regime, the insights gained through this investigation are also of value for specific bounded domains, most prominently when constructing efficient estimators for rare-event probabilities. In a paper with S.B. Moka, T. Taimre and D.P. Kroese, we develop conditional Monte Carlo algorithms for estimating rare-event probabilities for the random geometric graph. Building on conceptual insights from large-deviations theory, we illustrate that importance sampling using a Gibbsian point process can further substantially reduce the estimation variance.

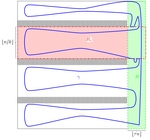

In 1934, Eugene Wigner introduced his landmark model of a gas of electrons in matter. A finite number of electrons are distributed in the Euclidean space and are subject to repulsive Coulomb forces. To confine the particles in a bounded domain, Wigner assumes the existence of a uniform background charge, approximating contributions from positive atoms. This ensures overall neutrality. The Feynman-Kac formalism makes it possible to express the quantum-mechanical statistics – i.e., Maxwell-Boltzmann, Bose-Einstein and Fermi-Dirac – in terms of expectation with respect to Brownian bridges subject to an interaction governed by a time-integration of the potential energy above.

Together with P. Jung and S. Jansen, we derive a level-3 large deviation principle for the associated Gibbsian process on an elongated strip (code).

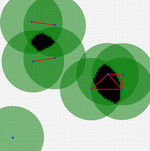

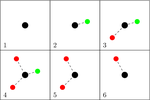

Spatial preferential attachment networks were introduced by

E. Jacob and

P. Mörters to illustrate how embedding a complex network in a Euclidean space can induce a positive clustering coefficient. More precisely, the nodes

In a paper with C. Mönch, we analyze shortest distances in this network and prove a large deviation principle for the neighborhood structure (code).